Over at StorageNerve, and on Twitter, Devang Panchigar has been asking “Is Storage Tiering ILM or a subset of ILM, but where is ILM?” I think it’s an important question with some interesting answers.

Devang starts by defining ILM from a storage perspective:

1) A user or an application creates data and possibly over time that data is modified.

2) The data needs to be stored and possibly be protected through RAID, snaps, clones, replication and backups.

3) The data now needs to be archived as it gets old, and retention policies & laws kick in.

4) The data needs to be search-able and retrievable NOW.

5) Finally the data needs to be deleted.

I agree with items 1, 3, 4 and 5 – as per previous posts, for what it’s worth, I believe that item 2 belongs to a sister activity which I define as Information Lifecycle Protection (ILP) – something that Devang acknowledges as an alternative theory. (I liken the logic of separating ILM from ILP to that of separating operational production servers from support production servers.)

The above list is actually a fairly astute summary of the storage industry’s involvement in ILM thus far. Devang rightly points out that Storage Tiering (migrating data between storage of different speed, capacity and cost based on usage, etc.) doesn’t address all of the above points – in particular, data creation and data deletion. That’s certainly true.
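To make the tiering idea concrete, here is a minimal Python sketch of a usage-based tiering decision. The tier names and age thresholds are hypothetical, picked purely for illustration; real products apply their own policies. Note that the function only ever answers "where should existing data live?", which is why creation and deletion fall outside its scope.

```python
from datetime import datetime, timedelta

# Hypothetical tiers and thresholds, for illustration only.
TIER_RULES = [
    (timedelta(days=30), "fast"),       # accessed within the last 30 days
    (timedelta(days=365), "capacity"),  # accessed within the last year
]
ARCHIVE_TIER = "archive"  # everything older than the last rule


def choose_tier(last_access: datetime, now: datetime) -> str:
    """Pick a storage tier based purely on how recently data was accessed."""
    age = now - last_access
    for max_age, tier in TIER_RULES:
        if age <= max_age:
            return tier
    return ARCHIVE_TIER
```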

What’s missing from ILM from a storage perspective are the components that storage can only peripherally control. Perhaps that’s not entirely accurate – the storage industry can certainly participate in the remaining components (indeed, NAS systems are a prime example of where it’s absolutely necessary) – but it’s more than just the storage industry. It’s operating system vendors. It’s application vendors. It’s database vendors. It is, quite frankly, the whole kit and caboodle.

What’s missing in the storage-centric approach to ILM is identity management – or to be more accurate in this context, identity management systems. The brief outline of identity management is that it’s about moving access control and content control out of the hands of the system, application and database administrators, and into the hands of human resources/corporate management. So a system administrator could have total systems access over an entire host and all its data but not be able to open files that (from a corporate management perspective) they have no right to access. A database administrator can fully control the corporate database, but can’t access commercially sensitive or staff salary details, etc.

Most typically though, it’s about corporate roles, as defined in human resources, being reflected from the ground up in system access options. That is, when human resources set up a new employee with a particular role within the organisation (e.g., “personal assistant”), that triggers the appropriate workflows to set up that person’s accounts and access privileges for IT systems as well.
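As a rough illustration of role-driven provisioning, the Python sketch below derives a person's access entirely from their HR-defined role. Everything in it (the role names, the entitlement names, and the `provision` and `can_access` functions) is hypothetical and not taken from any real identity management product.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from HR-defined roles to IT entitlements.
ROLE_ENTITLEMENTS = {
    "personal assistant": {"email", "calendar", "shared-documents"},
    "database administrator": {"email", "db-admin-console"},
}


@dataclass
class Account:
    username: str
    role: str
    entitlements: set = field(default_factory=set)


def provision(username: str, role: str) -> Account:
    """Create an account whose access is derived only from the HR role."""
    if role not in ROLE_ENTITLEMENTS:
        raise ValueError(f"no entitlements defined for role: {role!r}")
    return Account(username, role, set(ROLE_ENTITLEMENTS[role]))


def can_access(account: Account, resource: str) -> bool:
    """Access follows the role, not the user's technical privileges."""
    return resource in account.entitlements
```

In this sketch a database administrator can reach the admin console but is refused a resource such as `"salary-records"`, simply because that entitlement was never attached to the role.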

If you think that’s insane, you probably don’t appreciate the purpose of it. System/app/database administrators I talk to about identity management frequently raise the issue of trust (or the perceived lack thereof) involved in such systems. I.e., they think that if the company they work for wants to implement identity management, it doesn’t trust the people who are tasked with protecting the systems. I won’t lie, I think in a very small number of instances, this may be the case. Maybe 1%, maybe as high as 2%. But let’s look at the bigger picture here – we, as system/application/database administrators, currently have access to such data not because we should have access to it, but because until recently there have been very few options in place to limit data access to only those who, from a corporate governance perspective, should have it. In practice, most system/app/database administrators are highly ethical – they know that being able to access data doesn’t equate to actually accessing that data. (Case in point: as the engineering manager and sysadmin at my last job, if I’d been less ethical, I would have seen the writing on the wall long before the company fell down under financial stresses around my ears!)

Trust doesn’t wash in legal proceedings. Trust doesn’t wash in financial auditing. This is particularly so in situations where accurate logs aren’t maintained in an appropriately secured manner to prove that person A didn’t access data X. The fact that the system was designed to permit A to access X (even as part of A’s job) is, in some financial, legal and data sensitivity areas, significant cause for concern.

Returning to the primary point though, it’s about ensuring that the people who have authority over someone’s role within a company (human resources/management) have control over the processes that configure the access permissions that person has. It’s also about making sure that those workflows are properly configured and automated so there’s no room for error.

So what’s missing – or what’s only at the barest starting point – is the integration of identity/access control with ILM (including storage tiering) and ILP. This, as you can imagine, is not an easy task. Hell, it’s not even a hard task – it’s a monumentally difficult task. It involves a level of cooperation and coordination between different technical tiers (storage, backup, operating systems, applications) that we rarely, if ever, see beyond the basic “must all work together or else it will just spend all the time crashing” perspective.

That’s the bit that gives the extra components – control over content creation and destruction. The storage industry on its own does not have the correct levels of exposure to an organisation in order to provide this functionality of ILM. Nor do the operating system vendors. Nor do the database vendors or the application vendors – they all have to work together to provide a total solution on this front.

I think this answers (indirectly) Devang’s question/comment on why storage vendors, and indeed most of the storage industry, have stopped talking about ILM – the easy parts are well established, but the hard parts are only in their infancy. We are, after all, seeing some very early processes around integrating identity management and ILM/ILP. For instance, key management on backups, if handled correctly, can allow for situations where backup administrators can’t by themselves perform the recovery of sensitive systems or data – it requires corporate permissions (e.g., the input of a data access key by someone in HR). Various operating systems and databases/applications are now providing hooks for identity management (to name just one, here are Oracle’s details on it).
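One simple way to picture that kind of shared control is to split the backup encryption key so that no single person holds it. The Python sketch below uses a two-share XOR split; it is an illustrative assumption, not a description of how any particular backup product handles key management, and the function names are hypothetical.

```python
import secrets
from typing import Tuple


def split_key(key: bytes) -> Tuple[bytes, bytes]:
    """Split a key into two shares; neither share alone reveals the key."""
    share_a = secrets.token_bytes(len(key))                # random share
    share_b = bytes(k ^ a for k, a in zip(key, share_a))   # key XOR share_a
    return share_a, share_b


def combine_shares(share_a: bytes, share_b: bytes) -> bytes:
    """Rebuild the original key; requires both shares."""
    if len(share_a) != len(share_b):
        raise ValueError("shares must be the same length")
    return bytes(a ^ b for a, b in zip(share_a, share_b))
```

Under this scheme the backup administrator might hold one share and someone in HR the other, so a recovery of sensitive data only proceeds when both are supplied.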

So no, I think we can confidently say that storage tiering in and of itself is not the answer to ILM. As to why the storage industry has for the most part stopped talking about ILM, we’re left with one of two choices – it’s hard enough that they don’t want to progress it further, or it’s sufficiently commercially sensitive that it’s not something discussed without the strongest of NDAs.

We’ve seen in the past that the storage industry can cooperate on shared formats and standards. We wouldn’t be in the era of pervasive storage we currently are without that cooperation. Fibre Channel, SCSI, iSCSI, FCoE, NDMP, etc., are proof positive that cooperation is possible. What’s different this time is that the cooperation extends over a much larger realm to also encompass operating systems, applications, databases, etc., as well as all the storage components in ILM and ILP. (It makes backups seem to have a small footprint, and backups are amongst the most pervasive of technologies you can deploy within an enterprise environment.)

So we can hope that the reason we’re not hearing a lot of talk about ILM any more is that all the interested parties are either working on this level of integration, or at least making the appropriate preparations themselves in order to start working together on it.

Fingers crossed people, but don’t hold your breath – no matter how closely they’re talking, it’s a long way off.

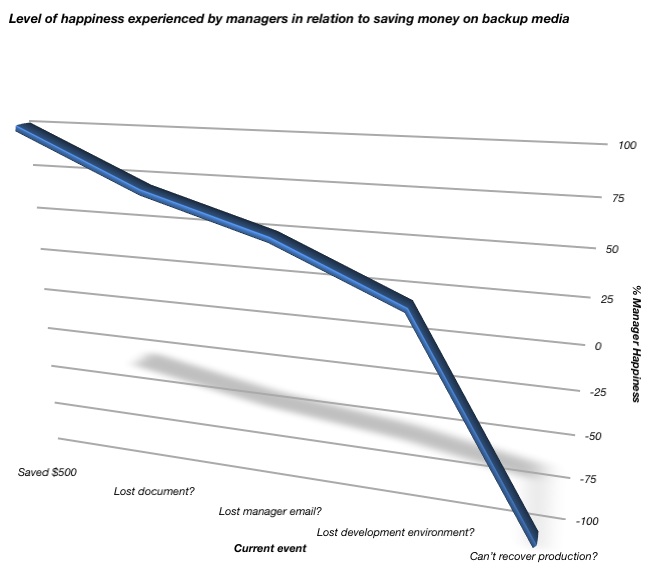
