For some time now, NetWorker has shipped with a peripheral product, Legato License Manager (LLM), now normally just referred to as “License Manager”.

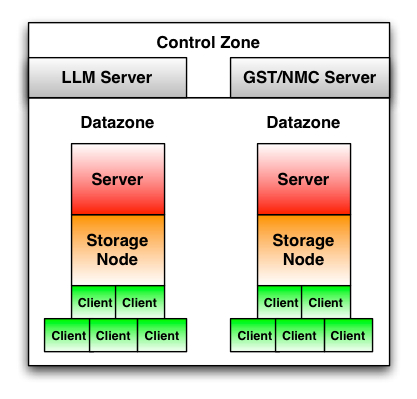

If you’ve never used LLM, you may wonder what use it serves. But to do that, we first need to look at the control zone, that region of space that encompasses one or more NetWorker datazones. This might resemble the following:

Both the NetWorker Management Console (NMC) server (typically the “gst” processes) and LLM reside in the control zone – that is, they exist to service multiple datazones. However, in the same way that many sites end up running the datazone and control zone (via NMC/GST) on the same backup server, there’s nothing preventing you from using LLM to manage licenses separately from the core NetWorker services.

Making this transition is relatively straightforward, and I’ll save doing an article on that aspect unless people would like to see one. Instead, I’d like to discuss the pros and cons of having licenses outside of NetWorker but still referenced by a NetWorker server.

Advantages of LLM

It’s best to first understand what LLM brings to you. I’ll use the following keys beside the advantages to let you know where they’re applicable:

- (D+) – Useful for multiple datazones.

- (1D) – Useful for single datazones.

- (M) – Marketing advantage; touted as a bonus by EMC/Legato, rarely if ever used.

So, some of the advantages are:

- (D+) Licenses may be purchased and installed in a central location.

- (D+) Licenses may be reallocated between NetWorker servers as resource requirements/capabilities change (i.e., released from one server, and snapped up by another).

- (1D/D+) License authorisation codes are tied to the LLM server, not the NetWorker server. Therefore, if you’re planning some NetWorker server migrations, you can move your licenses to LLM and avoid repeated host transfers.

- (M) You can buy “bulk” licenses (e.g., 10 x 5 Client Connection Licenses). The advantage here seems to be minimising the number of licenses you need to enter. While this sounds cute in theory, I think it actually adds a layer of licensing complexity.

- (1D/D+) Licenses reported in NMC show the exact features they are being used for – e.g., instead of showing “NetWorker Server, Network Edition/125” to indicate that the server is licensed for 125 clients, you might see “NetWorker Server, Network Edition/93” to indicate that currently there are 93 client licenses being used.

- (M) LLM is where the full version of NMC is licensed, so you only have to learn one licensing administration system.
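To make the reallocation and reporting points a little more concrete, here is a minimal sketch of the idea in Python. It is purely a toy model and has nothing to do with how LLM is actually implemented or driven: the `LicensePool` class, its methods and the server names `backup01` and `backup02` are all hypothetical. The only thing borrowed from the real product is the “name/count in use” reporting style described above.

```python
from dataclasses import dataclass, field


@dataclass
class LicensePool:
    """Toy model of a central pool of client licenses (hypothetical, not LLM's API)."""

    name: str
    capacity: int
    # Maps a NetWorker server name to the number of licenses it currently holds.
    in_use: dict[str, int] = field(default_factory=dict)

    def used(self) -> int:
        """Total licenses currently held across all servers."""
        return sum(self.in_use.values())

    def available(self) -> int:
        """Licenses still free in the central pool."""
        return self.capacity - self.used()

    def allocate(self, server: str, count: int) -> None:
        """Hand `count` licenses from the pool to `server`."""
        if count > self.available():
            raise ValueError(f"only {self.available()} licenses available")
        self.in_use[server] = self.in_use.get(server, 0) + count

    def release(self, server: str, count: int) -> None:
        """Return `count` licenses from `server` to the pool."""
        held = self.in_use.get(server, 0)
        if count > held:
            raise ValueError(f"{server} only holds {held} licenses")
        self.in_use[server] = held - count

    def report(self) -> str:
        """Report usage in the 'name/in-use' style rather than 'name/capacity'."""
        return f"{self.name}/{self.used()}"


# Two datazones drawing on one centrally installed license.
pool = LicensePool("NetWorker Server, Network Edition", capacity=125)
pool.allocate("backup01", 60)
pool.allocate("backup02", 33)
print(pool.report())

# Requirements change: backup01 releases ten clients, backup02 snaps them up.
pool.release("backup01", 10)
pool.allocate("backup02", 10)
print(pool.in_use, "free:", pool.available())
```

The point of the sketch is simply that the license count lives with the pool, not with any one server, so moving capacity between servers is a release followed by an allocate rather than a new purchase or a host transfer.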

Disadvantages of LLM

The advantages of LLM don’t come without some disadvantages too. These are:

- Not all licenses work all the time with LLM. For example, historically there have been ongoing issues with creating dedicated storage nodes in an environment using LLM. Typically it’s been necessary to add both a dedicated and a full storage node license, create the dedicated storage node devices, then delete the full storage node license. (Messy.)

- When working outside of NMC, the command line access to LLM licenses (via lgtolic) is even more esoteric and preposterous to use than nsrcap and nsradmin.

Should you use LLM?

It depends on your site. If yours is a fairly small environment, I can’t see any reason to switch from NetWorker licensing to LLM. However, if your site has a larger number of clients and client allocations change reasonably often, LLM may give you enough extra oversight to justify the switch, even if you’ve only got one datazone.

Alternatively, if you’re planning to transition your backup server a few times over a shorter period (e.g., migrating from current old hardware to interim hardware to full new hardware), then moving licenses out of NetWorker and into LLM may save you the hassle of getting them re-authorised at each step.

Should your LLM server be considered “production”?

If you’re using LLM, the obvious question that some will ask is – can this just be plonked on any old desktop PC? The answer is no. Well, to qualify that answer a bit – nothing prevents you from doing this except common sense. By all means have this running as a virtual machine somewhere, but it should still be considered a production machine in the same way that a backup server is a production machine: it’s part of support production rather than primary production, but it’s still production.

The first thing this should point out to you is that administrator zones could, if desired, overlap. For instance, in the above diagram we have:
