When I first mentioned probe based backups a while ago, I suggested that they’re going to be a bit of a sleeper function – that is, I think they’re being largely ignored at the moment because people aren’t quite sure how to make use of them. My take however is that over time we’re going to see a lot of sites shifting particular backups over to probe groups.

Why?

Currently a lot of sites shoe-horn ill-fitting backup requirements into rigid schedules. This results in frequent violations of a Zero Error Policy, which is a best-practice approach to backup. Here’s a prime example: at sites that need to do laptop and/or desktop backups using NetWorker, the administrators are basically resigned to failure rates of 50% or more in those groups, depending on how many machines are disconnected from the network at the time.

This doesn’t need to be the case – well, not any more thanks to probe based backups. So, if you’ve been scratching your head looking for a practical use for these backups, here’s something that may whet your appetite.

Scenario

Let’s consider a site where there is a group of laptops and desktops integrated into the NetWorker backup environment. However, there’s never a guarantee of which machines may be connected to the network at any given time. Therefore administrators typically configure laptop/desktop backup groups to start at, say, 10am, on the premise that most systems are likely to be available at that time.

Theory of Resolution

Traditional time-of-day start backups aren’t really appropriate to this scenario. What we want is a situation where the NetWorker server waits for those infrequently connected clients to be connected, then runs a backup at the next opportunity.

Rather than having a single group for all clients and accepting that the group will suffer significant failure rates, split each irregularly connected client into its own group, and configure a backup probe.

The backup system will loop probes of the configured clients during nominated periods in the day/night at regular intervals. When the client is connected to the network and the probe successfully returns that (a) the client is running and (b) a backup should be done, the backup is started on the spot.

Requirements

In order to get this working, we’ll need the following:

- NetWorker 7.5 or higher (clients and server)

- A probe script – one per operating system type

- A probe resource – one per operating system type

- A 1:1 mapping between clients of this type and groups.

Practical Application

Probe Script

This is a command which is installed on the client(s) in the same directory as the “save” or “save.exe” binary (depending on OS type), and whose name starts with either nsr or save. I’ll be calling my script:

nsrcheckbackup.sh

I don’t write Windows batch scripts. Therefore, I’ll give an example as a Linux/Unix shell script, with an overview of the program flow. Anyone who wants to write a batch script version is welcome to do so and submit it.

The “proof of concept” algorithm for the probe script works as follows:

- Establish a “state” directory in the client nsr directory called bckchk. I.e., if the directory doesn’t exist, create it.

- Establish a “README” file in that directory for reference purposes, if it doesn’t already exist.

- Determine the current date.

- Check for a previous date file. If there was a previous date file:

- If the current date equals the previous date found:

- Write a status file indicating that no backup is required.

- Exit, signaling that no backup is required.

- If the current date does not equal the previous date found:

- Write the current date to the “previous” date file.

- Write a status file indicating that the current date doesn’t match the “previous” date, so a new backup is required.

- Exit, signaling that a backup is required.

- If there wasn’t a previous date file:

- Write the current date to the “previous” date file.

- Write a status file indicating that no previous date was found so a backup will be signaled.

- Exit, signaling backup should be done.

Obviously, this is a fairly simplistic approach, but is suitable for a proof of concept demonstration. If you were wishing to make the logic more robust for production deployment, my first suggestion would be to build in mminfo checks to determine (even if the dates match), whether there has been a backup “today”. If there hasn’t, that would override and force a backup to start. Additionally, if users can connect via VPN and the backup server can communicate with connected clients, you may want to introduce some logic into the script to deny probe success over the VPN.
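As one illustration of the VPN suggestion, here is a minimal sketch of an address check that a probe script could call before its date checks. It is an assumption-laden example rather than a tested production approach: the prefixes in VPN_PREFIXES are hypothetical, the function name is my own, and how you obtain the client’s current address is left to your environment.

```shell
#!/bin/bash
# Hypothetical VPN address prefixes; replace with the ranges used at your site.
VPN_PREFIXES="10.8. 172.31.250."

# Returns 0 (true) if the given IPv4 address starts with one of the prefixes.
is_vpn_address() {
    local addr="$1" prefix
    for prefix in $VPN_PREFIXES
    do
        case "$addr" in
            "$prefix"*) return 0 ;;
        esac
    done
    return 1
}

# Hypothetical use near the top of the probe script:
#   if is_vpn_address "$CLIENT_ADDR"; then exit 1; fi   # no backup over VPN
```

A simple string-prefix match is crude compared to proper subnet arithmetic, but it keeps the probe script dependency free, which matters when it has to be deployed to a lot of laptops.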

If you wanted an OS-independent script for this, you may wish to code it in Perl, but I’ve held off doing that in this case simply because a lot of sites have reservations about installing Perl on Windows systems. (Sigh.)

Without any further guff, here’s the sample script:

preston@aralathan ~

$ cat /usr/sbin/nsrcheckbackup.sh

#!/bin/bash
# Probe script: exit 0 signals that a backup is required, exit 1 that it is not.

PATH=$PATH:/bin:/sbin:/usr/sbin:/usr/bin
CHKDIR=/nsr/bckchk

README=`cat <<EOF
==== Purpose of this directory ====

This directory holds state file(s) associated with the probe based
laptop/desktop backup system. These state file(s) should not be
deleted without consulting the backup administrator.
EOF
`

# Create the state directory and its README if they don't already exist.
if [ ! -d "$CHKDIR" ]
then
    mkdir -p "$CHKDIR"
fi

if [ ! -f "$CHKDIR/README" ]
then
    # Quoted so that the line breaks in the README text are preserved.
    echo "$README" > "$CHKDIR/README"
fi

DATE=`date +%Y%m%d`
COMPDATE=`date "+%Y%m%d %H%M%S"`
LASTDATE="none"
STATUS="$CHKDIR/status.txt"
CHECK="$CHKDIR/datecheck.lck"

# No previous date file: record today's date and signal a backup.
if [ -f "$CHECK" ]
then
    LASTDATE=`cat "$CHECK"`
else
    echo "$DATE" > "$CHECK"
    echo "$COMPDATE Check file did not exist. Backup required" > "$STATUS"
    exit 0
fi

# Previous date file was empty: treat it the same as a missing file.
if [ -z "$LASTDATE" ]
then
    echo "$COMPDATE Previous check was null. Backup required" > "$STATUS"
    echo "$DATE" > "$CHECK"
    exit 0
fi

# Compare today's date with the previously recorded date.
if [ "$DATE" = "$LASTDATE" ]
then
    echo "$COMPDATE Last backup was today. No action required" > "$STATUS"
    exit 1
else
    echo "$COMPDATE Last backup was not today. Backup required" > "$STATUS"
    echo "$DATE" > "$CHECK"
    exit 0
fi

As you can see, there’s really not a lot to this in the simplest form.

Once the script has been created, it should be made executable and, for Linux/Unix/Mac OS X systems, placed in /usr/sbin.
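For anyone rolling this out to more than a couple of machines, that step can be wrapped in a small helper. This is only a sketch: install_probe is a name I’ve made up, and the default destination simply reflects the /usr/sbin location mentioned above.

```shell
#!/bin/bash
# Copy a probe script into the destination directory and mark it executable.
# The destination defaults to /usr/sbin; pass a second argument to override it.
install_probe() {
    local src="$1" dest_dir="${2:-/usr/sbin}"
    cp "$src" "$dest_dir/" && chmod 755 "$dest_dir/$(basename "$src")"
}
```

Run as root, something like install_probe ./nsrcheckbackup.sh would put the script alongside the save binary on a typical Linux/Unix client.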

Probe Resource

The next step is to create a probe resource within NetWorker. This will be shared by all the probe clients of the same operating system type.

A completed probe resource might resemble the following:

Configuring the probe resource

Note that there’s no path in the above probe command – that’s because NetWorker requires the probe command to be in the same location as the save command.

Once this has been done, you can either configure the client or the probe group next. Since the client has to be reconfigured after the probe group is created, we’ll create the probe group first.

Creating the Probe Groups

The first step in creating the probe groups is to come up with a naming standard so that they can be easily distinguished from all the other standard groups within the overall configuration. There are two approaches you can take towards this:

- Preface each group name with a keyword (e.g., “probe”) followed by the host name the group is for.

- Name each group after the client that will be in the group, but set a comment along the lines of say, “Probe Backup for <hostname>”.

Personally, I prefer the second option. That way you can sort by comment to easily locate all probe based groups but the group name clearly states up front which client it is for.

When creating a new probe based group, there are two tabs you’ll need to configure – Setup and Advanced – within the group configuration. Let’s look at each of these:

Probe group configuration – Setup Tab

You’ll see from the above that I’m using the convention where the group name matches the client name, and the comment field is configured appropriately for easy differentiation of probe based backups.

You’ll need to set the group’s Autostart value to Enabled. Also, the Start Time field does have relevance exactly once for probe based backups – it still seems to define the first start time of the probe. After that, the probe backups will follow the interval and start/finish times defined on the second tab.

Here’s the second tab:

Probe Group - Advanced Tab

The key thing on this obviously is the configuration of the probe section. Let’s look at each option:

- Probe based group – Checked

- Probe interval – Set in minutes. My recommendation is to give each group a different interval. (Or at least reduce the number of groups that have exactly the same probe interval.) That way over time as probes run, there’s less likelihood of multiple groups starting at the same time. For instance, in my test setup, I have 5 clients, set to intervals of 90 minutes, 78 minutes, 104 minutes, 82 minutes and 95 minutes*.

- Probe start time – Time of day that probing starts. I’ve left this on the defaults, which may be suitable for desktops, but for laptops, where there’s a very high chance of machines being disconnected at night, you may wish to start probing closer to the start of business hours.

- Probe end time – Time of day that NetWorker stops probing the client. Same caveats as per the probe start time above.

- Probe success criteria – Since there’s only one client per group, you can leave this set to “All”.

- Time since successful backup – How many days NetWorker should allow probing to run unsuccessfully before it forcibly starts a backup. If set to zero it will never force a backup. Since I took the screen-shot I’ve changed that value and set it to 3 on my configured clients. Set yours to a site-optimal value. Note that since the aim is to run only one backup every 24 hours, setting this to “1” is probably not all that logical an idea.

(The last field, “Time of the last successful backup” is just a status field, there’s nothing to configure there.)
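To get a feel for why differing probe intervals help, a bit of arithmetic is useful. The sketch below assumes two groups begin probing at the same moment and then probe exactly on their interval, ignoring the probe start/end window; under those assumptions their probes next coincide at the least common multiple of the two intervals. The function names are my own.

```shell
#!/bin/bash
# Greatest common divisor (Euclid's algorithm).
gcd() {
    local a=$1 b=$2 t
    while [ "$b" -ne 0 ]
    do
        t=$((a % b)); a=$b; b=$t
    done
    echo "$a"
}

# Least common multiple: minutes until two probe intervals next line up.
lcm() {
    echo $(( $1 / $(gcd "$1" "$2") * $2 ))
}

lcm 90 78   # two of the intervals from my test setup
lcm 90 90   # two groups sharing the same interval
```

With intervals of 90 and 78 minutes the probes only line up every 1170 minutes (19.5 hours), whereas two groups that both use 90 minutes collide on every single probe.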

If you have schedules enforced out of groups, you’ll want to set the schedule up here as well.

With this done, we’re ready to move onto the client configuration!

Configuring the Client for Probe Backups

There are two changes required here. In the General tab of the client properties, move the client into the appropriate group:

Adding the client to the correct group

In the “Apps & Modules” tab, identify the probe resource to be used for that client:

Configuring the client probe resource

Once this has been done, you’ve got everything configured, and it’s just a case of sitting back and watching the probes run and trigger backups of clients as they become available. You’ll note, in the example above, that you can still use savepnpc (pre/post commands) with clients that are configured for probe backups. The pre/post commands will only be run if the backup probe confirms that a backup should take place.

Wrapping Up

I’ll accept that this configuration can result in a lot of groups if you happen to have a lot of clients that require this style of backup. However, that isn’t the end of the world. Reducing the number of errors reported in savegroup completion notifications does make the life of backup administrators easier, even if there’s a little administrative overhead.

Is this suitable for all types of clients? E.g., should you use this to shift away from standard group based backups for the servers within an environment? The answer to that is: very unlikely. I really do see this as something that is more suitable for companies that are using NetWorker to back up laptops and/or desktops (or a subset thereof).

If you think no-one does this, I can think of at least five of my customers alone who have requirements to do exactly this, and I’m sure they’re not unique.

Even if you don’t particularly need to enact this style of configuration for your site, what I’m hoping is that by demonstrating a valid use for probe based backup functionality, I may get you thinking about where it could be used at your site for making life easier.

Here are a few examples I can immediately think of:

- Triggering a backup+purge of Oracle archived redo logs that kicks in once the used capacity of the filesystem the logs are stored on exceeds a certain percentage (e.g., 85%).

- Triggering a backup when the number of snapshots of a fileserver exceeds a particular threshold.

- Triggering a backup when the number of logged in users falls below a certain threshold. (For example, on development servers.)

- Triggering a backup of a database server whenever a new database is added.
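To give the first of those ideas some shape, here is a minimal sketch of a capacity-based probe. It follows the same convention as the laptop probe script earlier (exit 0 to request a backup, exit 1 for no backup); returning 2 when the usage can’t be read is my own assumption, as are the function names, the example path and the threshold.

```shell
#!/bin/bash
# Print the used-capacity percentage (without the % sign) of the
# filesystem holding the given path, using POSIX-format df output.
used_percent() {
    df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Return 0 (backup required) when usage exceeds the threshold, 1 when it
# does not, and 2 (assumed error code) if the usage could not be determined.
probe_capacity() {
    local path="$1" threshold="$2" used
    used=$(used_percent "$path")
    [ -n "$used" ] || return 2
    if [ "$used" -gt "$threshold" ]
    then
        return 0
    fi
    return 1
}

# Hypothetical use in a probe script (path and threshold are placeholders):
#   probe_capacity /u01/archive 85; exit $?
```

The purge side of a backup+purge would still need to be handled by whatever the group actually runs; the probe only decides whether it’s time.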

Trust me: probe based backups are going to make your life easier.

—

* There currently appears to be a “feature” with probe based backups where changes to the probe interval only take place after the next “probe start time”. I need to do some more review on this and see whether it’s (a) true and (b) warrants logging a case.

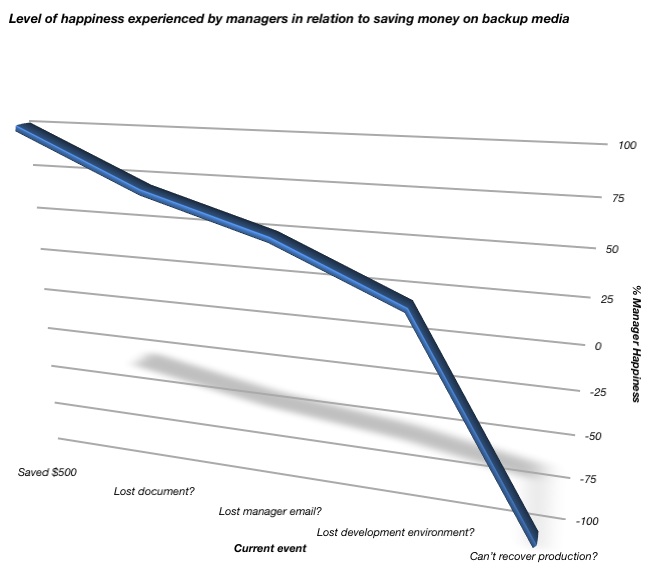
